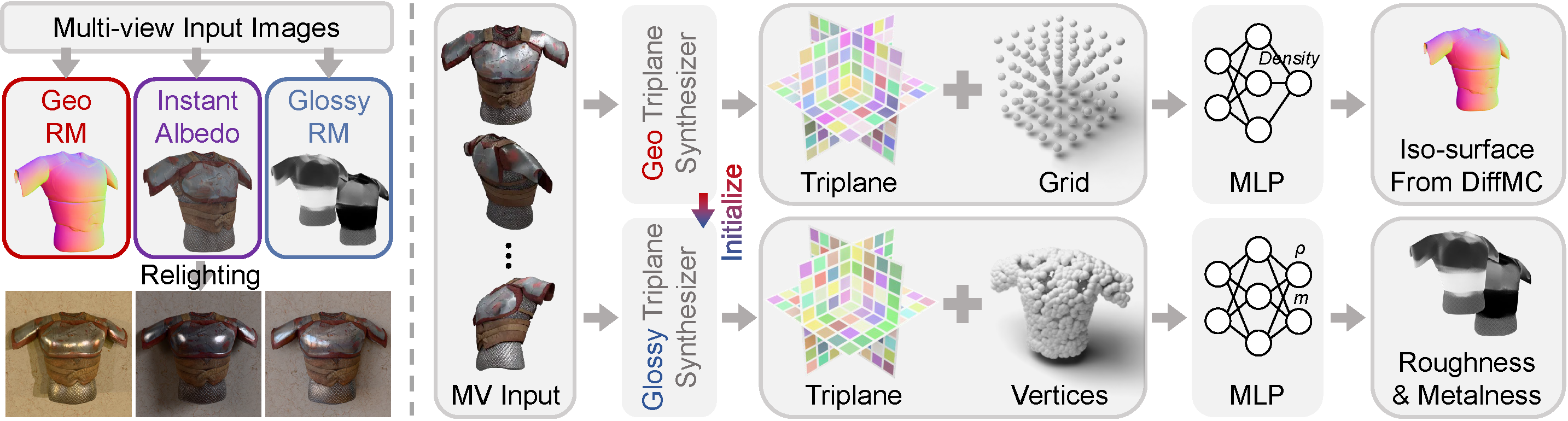

Overview of our pipeline

(left) Starting from sparse-view input images generated by a diffusion model, ARM separates shape and appearance generation into two stages. In the geometry stage, ARM uses GeoRM to predict a 3D shape from the input images. In the appearance stage, ARM employs InstantAlbedo and GlossyRM to reconstruct PBR maps, enabling realistic relighting under varied lighting conditions. (right) GeoRM and GlossyRM share the same architecture, consisting of a triplane synthesizer and a decoding MLP. GeoRM is trained to predict density, and an iso-surface is extracted from the resulting density grid with DiffMC; GlossyRM is trained to predict roughness and metalness. GlossyRM is trained after GeoRM, and its weights are initialized from those of GeoRM at the start of training.
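The shared decoder design in the right panel can be illustrated with a minimal NumPy sketch. It assumes the triplane synthesizer has already produced three axis-aligned feature planes (here just random arrays), that a 3D point's feature is the *sum* of bilinearly sampled features from the xy, xz and yz planes (one common choice; concatenation is another), and that the heads use softplus for density and sigmoid for roughness/metalness. All function names, layer sizes and activations are hypothetical illustrations, not ARM's implementation. The caption does not say how the output layer is adapted when GlossyRM is initialized from GeoRM; copying the hidden layer and re-initializing the output layer is likewise an assumption.

```python
import copy
import numpy as np

def bilinear_sample(plane, uv):
    """Sample a (C, H, W) feature plane at (N, 2) coords in [-1, 1]; returns (N, C)."""
    C, H, W = plane.shape
    x = (uv[:, 0] + 1) * 0.5 * (W - 1)  # map [-1, 1] to pixel coordinates
    y = (uv[:, 1] + 1) * 0.5 * (H - 1)
    x0 = np.clip(np.floor(x).astype(int), 0, W - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, H - 2)
    wx, wy = (x - x0)[:, None], (y - y0)[:, None]
    f00, f01 = plane[:, y0, x0].T, plane[:, y0, x0 + 1].T
    f10, f11 = plane[:, y0 + 1, x0].T, plane[:, y0 + 1, x0 + 1].T
    return (f00 * (1 - wx) * (1 - wy) + f01 * wx * (1 - wy)
            + f10 * (1 - wx) * wy + f11 * wx * wy)

def query_triplane(planes, pts):
    """Aggregate (assumed: sum) features of (N, 3) points from the xy, xz, yz planes."""
    return (bilinear_sample(planes[0], pts[:, [0, 1]])
            + bilinear_sample(planes[1], pts[:, [0, 2]])
            + bilinear_sample(planes[2], pts[:, [1, 2]]))

def init_mlp(rng, in_dim, hidden, out_dim):
    """Hypothetical two-layer decoding MLP."""
    return {"W1": rng.standard_normal((in_dim, hidden)) * 0.1, "b1": np.zeros(hidden),
            "W2": rng.standard_normal((hidden, out_dim)) * 0.1, "b2": np.zeros(out_dim)}

def mlp(params, feats):
    h = np.maximum(feats @ params["W1"] + params["b1"], 0.0)  # ReLU hidden layer
    return h @ params["W2"] + params["b2"]

def georm_decode(planes, params, pts):
    """GeoRM-style head: non-negative density per point via softplus."""
    return np.logaddexp(0.0, mlp(params, query_triplane(planes, pts)))[:, 0]

def glossyrm_decode(planes, params, pts):
    """GlossyRM-style head: roughness and metalness squashed into (0, 1) via sigmoid."""
    out = 1.0 / (1.0 + np.exp(-mlp(params, query_triplane(planes, pts))))
    return out[:, 0], out[:, 1]

def init_glossy_from_geo(geo_params, rng, hidden):
    """Warm-start from GeoRM: copy all weights, then (assumed) re-init the 2-channel output layer."""
    params = copy.deepcopy(geo_params)
    params["W2"] = rng.standard_normal((hidden, 2)) * 0.1
    params["b2"] = np.zeros(2)
    return params

# Usage: random triplanes stand in for the synthesizer's output.
rng = np.random.default_rng(0)
C, R, hidden = 8, 16, 32
planes = rng.standard_normal((3, C, R, R))
pts = rng.uniform(-1, 1, size=(5, 3))
geo = init_mlp(rng, C, hidden, 1)
density = georm_decode(planes, geo, pts)
glossy = init_glossy_from_geo(geo, rng, hidden)
rough, metal = glossyrm_decode(planes, glossy, pts)
```

Because both heads read the same kind of triplane features through the same MLP body, GlossyRM can start from GeoRM's learned geometry-aware features and only needs to adapt the output to material properties. In the pipeline, the density values would be evaluated on a dense grid before DiffMC extracts the surface; that step is not sketched here.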